The title for this activity is Preprocessing Text. The lessons we learn in this activity are very useful in handwriting recognition, where individual letters must be extracted.

We were given the task of extracting a group of text from a scanned image. We rotated the image using mogrify(), a function in Scilab that can do almost anything the Free Transform tool in Photoshop can do (although I favor the latter). We removed the lines using the techniques from the moon photo and the canvas weave in the previous filtering activity, binarized the image by thresholding it, and then labeled the blobs using bwlabel().
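To make the binarizing step concrete, here's a minimal sketch in plain Python (not the Scilab code used in the activity). It assumes dark ink on light paper, and the `binarize` helper, the toy pixel values, and the cutoff of 128 are all made up for illustration.

```python
def binarize(gray, threshold):
    """Return a 0/1 image: 1 where a pixel is darker than threshold (ink), else 0."""
    return [[1 if px < threshold else 0 for px in row] for row in gray]

# Toy grayscale patch (0 = black, 255 = white); values are hypothetical.
gray = [
    [250, 248, 251, 249],
    [247,  30,  35, 250],
    [249,  28, 252, 251],
]

binary = binarize(gray, threshold=128)
for row in binary:
    print(row)
```

In practice the cutoff would be picked from the histogram of the actual scan rather than hard-coded.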

This activity pretty much summarizes everything we've learned so far, so it's vital to show the resulting image for each step. Apparently, mogrify() runs into a stack problem on my PC, causing SIP to go FUBAR. I had no other choice but to use the Free Transform tool in Photoshop.

By looking at the Fourier domain of our image, we can get an idea of the type of filter we'll need. This case is pretty much like the moon photo, except this time the lines are horizontal. Horizontal lines vary only along the vertical direction, so their energy shows up as a bright vertical line through the center of the spectrum. From the Fourier domain of the image as shown below, the best filter is a vertical line masking that white vertical line, taking care not to touch the point at the center. That point is the DC component, which carries the average brightness of the image, so masking it results in a great loss of information.
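The mask described above can be sketched in plain Python. This is only an illustration, not the filter actually used: it assumes the spectrum has been shifted so the DC term sits at the center, and the `vertical_line_mask` name and its `half_width` and `keep_radius` parameters are hypothetical.

```python
def vertical_line_mask(rows, cols, half_width=1, keep_radius=2):
    """Build a 0/1 mask for a centered (shifted) spectrum.

    Zeros a vertical band through the center column, but leaves rows
    within keep_radius of the center row untouched so the DC term survives.
    """
    cy, cx = rows // 2, cols // 2
    mask = [[1] * cols for _ in range(rows)]
    for y in range(rows):
        if abs(y - cy) <= keep_radius:
            continue  # protect the DC term and its immediate neighbors
        for x in range(cx - half_width, cx + half_width + 1):
            if 0 <= x < cols:
                mask[y][x] = 0
    return mask

# Small 9x9 example: a one-pixel-wide band with a 3-row gap at the center.
mask = vertical_line_mask(9, 9, half_width=0, keep_radius=1)
for row in mask:
    print("".join(str(v) for v in row))
```

The mask would then be multiplied element by element with the shifted Fourier transform before taking the inverse transform. How wide the band and the protected gap should be depends on the actual spectrum.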

The following images show the text before (L) and after (R) the closing operator was applied. Note that the noise inside the letters was significantly reduced.

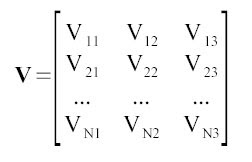
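For reference, closing is a dilation followed by an erosion, which is why it fills small holes inside the letters. Here's a minimal sketch in plain Python, assuming a binary image where 1 is ink and a 3x3 square structuring element. The function names and the toy "letter" are made up, and this is not the Scilab code used for the images above.

```python
def _window(img, y, x):
    """Values in the 3x3 neighborhood of (y, x), clipped at the image border."""
    rows, cols = len(img), len(img[0])
    return [img[j][i]
            for j in range(max(0, y - 1), min(rows, y + 2))
            for i in range(max(0, x - 1), min(cols, x + 2))]

def dilate(img):
    """A pixel becomes 1 if any pixel in its 3x3 window is 1."""
    return [[1 if any(_window(img, y, x)) else 0
             for x in range(len(img[0]))] for y in range(len(img))]

def erode(img):
    """A pixel stays 1 only if every pixel in its 3x3 window is 1."""
    return [[1 if all(_window(img, y, x)) else 0
             for x in range(len(img[0]))] for y in range(len(img))]

def closing(img):
    """Dilation followed by erosion."""
    return erode(dilate(img))

# Toy 9x9 "letter": a 5x5 block of ink with a one-pixel hole in the middle.
letter = [[1 if 2 <= y <= 6 and 2 <= x <= 6 else 0 for x in range(9)]
          for y in range(9)]
letter[4][4] = 0

closed = closing(letter)
for row in closed:
    print("".join(str(v) for v in row))
```

The block keeps its outline while the hole gets filled. A larger structuring element would fill bigger gaps, but it could also merge neighboring letters.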

Finally, here's the image with indexed values for each blob, displayed using hotcolormap. xD
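To show what the labeling step does, here's a rough Python sketch of connected-component labeling with a flood fill. It is not bwlabel() itself: the `label_blobs` name is made up, and 8-connectivity (diagonal neighbors count as touching) is an assumption on my part.

```python
from collections import deque

def label_blobs(binary):
    """Give each connected group of 1-pixels its own integer label (1, 2, ...)."""
    rows, cols = len(binary), len(binary[0])
    labels = [[0] * cols for _ in range(rows)]
    count = 0
    for y in range(rows):
        for x in range(cols):
            if binary[y][x] == 0 or labels[y][x] != 0:
                continue  # background, or already part of a labeled blob
            count += 1
            labels[y][x] = count
            queue = deque([(y, x)])
            while queue:
                cy, cx = queue.popleft()
                # Visit the 8 neighbors (assumed connectivity), clipped at the border.
                for ny in range(max(0, cy - 1), min(rows, cy + 2)):
                    for nx in range(max(0, cx - 1), min(cols, cx + 2)):
                        if binary[ny][nx] and labels[ny][nx] == 0:
                            labels[ny][nx] = count
                            queue.append((ny, nx))
    return labels, count

# Toy binary image with three separate blobs.
binary = [
    [1, 1, 0, 0, 1],
    [1, 0, 0, 0, 1],
    [0, 0, 1, 0, 0],
    [0, 0, 1, 1, 0],
]
labels, count = label_blobs(binary)
for row in labels:
    print(row)
```

Each blob ends up with its own index, which is what makes it possible to pull out individual letters afterwards.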

I'd like to acknowledge Neil for helping me out with this activity, especially since I needed to do it again, all thanks to the recent USB disk loss. I'd also like to thank Hime for her support, and for keeping me awake last night through all the blog posts I needed to write. The post date might lie, but this is actually the last blog post I made in time for the deadline.

I get a 9 for this activity, probably for the effort in trying to recover the lost files.